Speech2Text for Med

research areas

timeframe

2025 - 2025

contact

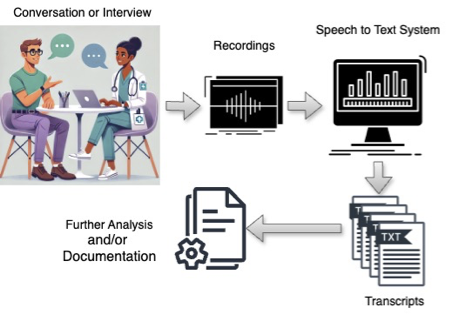

tilia.ellendorff@uzh.chBackground: Speech-to-Text systems have made enormous progress with recent transformer technology, for instance Open AI Whisper. Are they so reliable already that they can be used to recognize strong dialects, such as Swiss German, German by Language Learners, and medical terminology? Can they detect pragmatic features like hesitation and false starts?

In the Swiss context, however, spoken language:

- Typically includes Swiss German which is still a challenge to current off-the-shelf systems.

- Patients may have a non-native linguistic background which can impact the performance of speech-to-text systems.

- For medical applications, it is important to know how well the systems manage to recognize medical terminology (e.g. medication, anatomy, diagnoses), and

- pragmatic factors like pauses, hesitation markers and repetitions. These are important features, for example for the diagnoses of several diseases (e.g. neurogenerative disorders) but can also be markers of distress.

Aim and Goals

Speech2Text for Med aims to evaluate state-of-the-art speech-to-text pipelines for the transcription of spoken language in the medical domain. This project collaborates with Talk2UZH, with the intent of including the system there.

We strive to gain a detailed understanding of how well speech-to-text works with Swiss German, and in the medical sector.

Relevance

We have had several requests for such a system by Psychiatry, Ethics, and Language & Health. We envisage this research to be seed-funding.

Methods

We apply the latest transformer models, such as Open AI Whisper and conduct a detailed evaluation. In addition to word error rates on Swiss German vs. High German and vs. Learner Language we will measure performance on medical terms, recognition of pragmatic markers. As fare as available we will correlate metadata, such age, gender, cognitive impairment, learner level, and whether external vocabulary lists can improve the results.

Timeline:

- WP1: Assessment of SOTA approaches (February 2025)

- WP2: Data Collection: we ask workshop participants and experts to furnish audio recordings, e.g. interviews (March 2025)

- WP3: Evaluation and Annotation as shared effort leading to the Workshop (May 2025)

- WP4: start to apply for a larger grant with re-training (June 2025)

- WP 5:synchronisation with Talk2UZH (September 2025)